Should you build or buy? What will actually move the needle for your legal team? And what should you have in place before rolling anything out?

In this comprehensive guide, we cut through the noise. Whether you're a seasoned GC or just starting your legal ops journey, you’ll find practical advice on evaluating Legal AI, making smart decisions, and avoiding costly missteps.

What started as cautious experiments with clause extraction or keyword tagging has evolved into a wave of transformative legal AI technologies, from generative AI drafting entire contracts to agentic tools adding redlines to legal documents autonomously.

What once sat on innovation roadmaps is now in production, powering workflows across in-house teams, law firms, and legal tech providers.

The early 2010s saw a flurry of interest in AI for law, mainly rule-based engines and machine learning tools that could parse documents or score risk. But adoption was slow. Tools were clunky. Legal work was too nuanced, too contextual — and frankly, most AI wasn’t good enough to trust.

Then came 2022. The release of ChatGPT, built on the large language model (LLM) GPT-3.5, and then GPT-4 the following year, changed the equation. Now we have GPT-5. For the first time, lawyers could interact with AI in natural language, and the AI could respond with outputs that felt not just usable, but genuinely useful.

Suddenly, lawyers didn’t need to be able to code to benefit from AI. They just needed a question, a clause, or a contract.

That leads us nicely into where we are today, with more than 90 per cent of in-house lawyers using these generative AI tools either daily or weekly, and almost all of the lawyers we recently surveyed saying they believe that AI will change their job over the course of a year.

In fact, a survey by LexisNexis revealed in-house corporate legal teams to be leading the charge with their adoption of AI, with 72 per cent already using it in their work, and a further 25 per cent planning to. That’s 26 per cent higher than the industry average (including law firms, public sector, and the bar).

There’s been no shortage of noise about AI replacing lawyers. It’s a conversation that’s sparked anxiety in the legal profession for years, and understandably so.

But at Juro, we don’t believe it’ll go that way.

AI isn’t replacing lawyers — it’s making them faster, sharper, and more effective at what they do best. For in-house teams, that means fewer manual tasks, shorter contract cycles, and more time to focus on the strategic work that actually moves the needle for the business.

Legal AI is at its best when it’s helping lawyers get rid of the repetitive, time-consuming work — the boilerplate, the clause comparisons, the endless formatting — and letting them set the rules that AI then follows. It’s not about giving up control. It’s about applying your judgement where it counts, and letting legal automation do the rest.

Essentially, this benefits lawyers in three key ways.

By taking care of the high-volume, low-value tasks — like reviewing standard terms, checking for clause consistency, or approving routine contracts like NDAs — AI frees up legal teams to focus on the work that really needs their input: advising on risk, negotiating complex deals, and shaping business strategy.

By doing this, legal AI shifts legal’s role from reactive support to proactive partner: advising on business risk, navigating complex deals, and contributing to strategic decisions without being buried in admin.

Legal AI tools can analyse large volumes of contract data quickly — pulling trends, identifying anomalies, and surfacing hidden risks across your contract portfolio.

For example, you can instantly see how termination clauses vary across hundreds of MSAs, or which contracts are missing key obligations. That kind of insight used to take days, but now it takes seconds.

AI reduces the need for external legal spend on routine matters, like contract review, redlining, and first-pass due diligence, because it automates them.

It also cuts internal time spent on manual legal work, helping smaller teams support growing businesses without scaling headcount at the same pace. The net result: a more efficient legal function that delivers more value, without the extra cost.

This can present itself in a few ways:

Not long ago, I sat down with Vanessa Fagard, FP&A Manager at Convious, to discuss the second kind. She shared how Juro’s AI assistant translated contracts into four different languages in a matter of minutes:

It may feel early, but it’s already clear that legal and commercial teams are benefitting immeasurably from legal AI across a wide range of tasks, from drafting to translations and even the automation of post-signature contract management.

Legal AI has matured from a niche experiment into a core capability. Today, it’s not just helping lawyers work faster, it’s transforming how legal teams operate, how they collaborate with the business, and how they deliver value at scale.

Below are the key ways in-house legal teams are already putting AI to work — not just in contracts, but across the full legal function — along with the tools helping them do it.

The legal AI market is booming, but it’s also fragmented, fast-moving, and often hard to decode.

What’s the difference between an AI assistant and a contract lifecycle platform? Between copilots and agents? Between a “legal AI tool” and just good software that happens to use AI?

In reality, not all legal AI tools are created equal. And when you’re choosing a platform to bet on, understanding what category a tool sits in, and what problem it’s best at solving, is just as important as what tech it uses under the hood.

Broadly speaking, legal AI tools can fit into a few categories…

Unlike many tools that fit neatly into these boxes, Juro doesn’t just plug into one stage of the contract lifecycle — it combines functionality from multiple legal AI software categories into a single, browser-based platform, helping fast-scaling businesses manage contracts more efficiently from end to end.

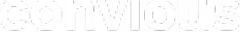

Juro empowers business teams to generate compliant contracts in seconds through structured templates and natural language AI prompts.

Whether using smartfields, dropdowns, or AI-assisted clause generation, users create contracts without needing legal to get involved in every draft.

Juro’s AI reviews contracts in-browser, providing instant redlines, risk flags, and fallback suggestions aligned with your contract playbook. Best of all, because the AI is agentic, all of this happens autonomously.

This reduces legal review cycles and helps business teams close deals faster, even on third-party paper.

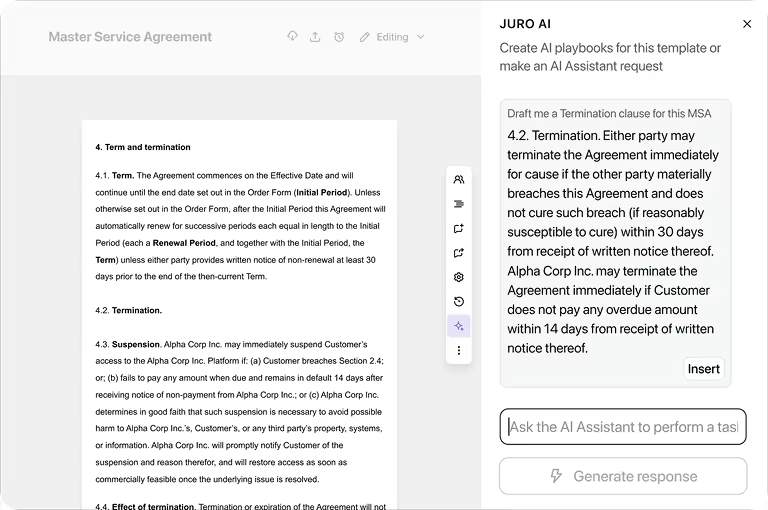

Juro’s AI identifies and pulls out key terms, clauses, and metadata from both legacy and in-flight contracts.

This eliminates the need for manual tagging and makes contract data searchable, actionable, and audit-ready from day one, even for contracts on the other party’s paper.

With structured contract data captured at creation and enriched via AI, Juro delivers real-time insights into contract volumes, renewal deadlines, and more.

All of this data is collated and used to filter, track, and monitor contracts at scale.

We’re in an era where AI doesn’t just help with tasks — it completes them. This is better known as agentic AI.

Agentic AI refers to intelligent systems that can independently complete entire workflows, like reviewing a contract, redlining risky clauses, routing it to stakeholders, and even triggering approval flows.

Unlike simple prompt-based assistants, agentic tools make decisions based on context, user intent, and prior interactions — transforming them into digital teammates, not just tools.

Jake Jones, Co-founder of Flank, an AI agent, shared his great definition of agentic AI on our podcast recently:

Agents aren’t to be confused with copilots, though. There are some important distinctions between the two. Agents can perform tasks autonomously, whereas copilots typically won’t.

Let’s take a common AI use case in legal: reviewing a contract. A copilot might read your contract and recommend actions for you to take: agree with this clause interpretation, action this reminder date, flag this deviation from your standard playbook, and so on.

Meanwhile, an agent might read your contract with all the same context a copilot has, but instead of recommending actions, it takes them. It just does it.

That’s what we’re delivering to in-house legal and business teams.

Juro’s AI contract review functionality enables teams to review and redline contracts with AI agents, meaning anyone can get approved redlines in a matter of minutes, without your GC on hand.

The contract review agent can handle entire workflows: reviewing third-party contracts against your positions, applying redlines, notifying legal if something falls outside the rules, and escalating only when needed.

Post-ChatGPT, it suddenly felt like every team could (and maybe should?) build their own legal AI tool. Open-source models were accessible, APIs were everywhere, and prompts became a new language.

It’s not quite the same as when YouTube made everyone a creator, but it’s close. So if software development has been democratized, what does it mean for one of the oldest tensions in legal technology: do you buy, or do you build?

If your pain point is summarizing a document, you probably don’t need legal tech: you need ChatGPT. If you want to build a ticketing system, you don’t need legal tech: you need Zapier.

So far, so good. But … when you reach a certain level of complexity, or risk, or monetary value … your vibe-coded solutions will start to creak. And then break. You need to consider how much of your time you’re realistically comfortable investing into developing and maintaining a custom-built solution.

But above all else, you need to decide where legal tech vendors can and should be adding value that can’t easily be reproduced with off-the-shelf tools.

In legal, trust isn’t a nice-to-have… it’s non-negotiable.

AI that delivers outputs without explanation — the so-called "black box" — might fly in consumer tools, but it simply doesn’t pass muster in a legal context. If your AI recommends deleting an indemnity clause, the very first question will be: why?

In practice, this means legal AI tools must now do more than provide answers. They need to:

Explainability transforms AI from an output engine into a partner in legal reasoning, and it’s the perfect aid for commercial teams that need to relay the explanation for redlines shared with counterparties, for example, without the support of an in-house lawyer.

We speak to in-house lawyers comparing and trialling the latest developments in legal AI daily, so we know what matters most to you. We also know that the landscape is a confusing one, with lots of distinct offerings buried in jargon.

So, here’s a framework we’ve developed to help you understand how to evaluate legal AI tools across the market.

We’ve curated the questions we’ve heard from in-house lawyers first-hand to give you a better idea of what others in your position are already asking:

• Can the tool review from a contract repository, or does it need manual uploads?

• Do you have any accuracy benchmarks you can share?

• Can it handle general review without a playbook?

• How does it detect risks not explicitly defined in the playbook?

Avoids relying on partial coverage or manual effort that erodes ROI

• Is there a default playbook or must we build from scratch?

• Can the vendor assist in creating playbooks and guardrails?

• Should vendors provide guidance on market practice positions?

• How customizable are playbooks?

Playbook quality determines AI quality; support here accelerates adoption

• Can the tool integrate with tools my team already use, like Word, Slack & CRMs?

• How are contracts searchable post-signature?

Prevents data silos and enables portfolio-level insight

• If the AI flags non-conformities in a self-service workflow, how is the approver notified?

• How does the tool mitigate risk when non-lawyers use it?

• Does the platform provide reasoning along with the decisions it makes for transparency?

Ensures business speed doesn’t come at the expense of compliance

• Where is the data processed and stored?

• Does the vendor train models on our data?

• How are access controls managed?

• Is there a documented data retention/deletion policy?

Contracts often contain sensitive or regulated information — poor controls here can cause legal, reputational, and regulatory risk

• Is the AI trained for multiple jurisdictions?

• How does it stay current on legal changes?

• Can it be used for documents in other languages?

Critical for global or cross-border teams

• Are there plans to expand into areas like due diligence questionnaires or DPIAs?

• Can the AI handle both playbook-driven and open-ended review?

• What other features are on your roadmap?

Tests future-proofing and versatility

• If budget allows for either AI or a junior lawyer, how do they compare on throughput, accuracy, and coverage?

• How much time and resource needs to be invested on our part to make it operational?

Forces a real ROI conversation

As legal AI tools become more capable, it’s essential to stay grounded in the risks as well as the rewards.

For in-house legal teams, that means thinking carefully about how AI is deployed and where the boundaries should be.

Who’s responsible if the AI makes a mistake? How do you validate outputs? What data is being used to generate results? Should that data be inputted at all, and if so, how is it protected?

These aren’t hypothetical concerns. They are questions legal teams need to answer today.

But how can they do that when regulators themselves appear to be falling behind? When we surveyed in-house lawyers earlier this year, 66 per cent believed that regulators had either little or no understanding of the technology they’re regulating.

However, that doesn’t excuse lawyers from their duty to use AI responsibly and enforce that same expectation across their business.

After all, we’re already seeing high-profile cases in which companies using AI in contentious ways have faced legal scrutiny:

• Meta and Anthropic have been in court defending their use of books to train AI, following claims of copyright infringement

• Disney and Universal have sued AI firm Midjourney, claiming that "innumerable" copies of their characters have been reproduced by its AI image generator

• Questions have been raised about AI’s responsibility in psychological harm cases following the death of a teenager who became obsessed with a chatbot

And that’s not all. New data has revealed that entry-level roles have dropped by a third since the launch of ChatGPT, meaning the advent of widely available AI tools has already had a real and measurable impact on the career prospects of the younger generation. This is true even in larger, well-resourced organizations like the UK’s Big Four, which are slashing graduate roles.

Add to that the fact that AI runs on energy, not magic, and it’s easy to visualize the impact AI has, and will continue to have, on environmental resources too:

“Most large-scale AI deployments are housed in data centres, including those operated by cloud service providers. These data centres can take a heavy toll on the planet.”

Why are we labouring these points in a guide about legal AI? Well, it’s easy to think of legal AI as just another tooling decision: which solution should we buy to speed up contract reviews?

But the role of in-house legal goes far deeper than procurement. As AI reshapes how businesses build, operate and sell, legal sits at the centre of that change — advising on risks, enabling innovation, and setting the rules for responsible use.

Ultimately, any in-house lawyer should have these three questions front of mind:

If your business is developing AI products, legal has a key role in ensuring they're safe, compliant, and transparent from the outset. That means advising on IP rights, user consent, model explainability, and how risks are disclosed. It's not just about checking the box — it’s about helping build trust into the product.

AI is being used across the business — by marketing, HR, sales, and ops. Legal teams should take the lead on setting internal guardrails: what’s allowed, what’s not, and where human oversight is required.

That might mean drafting acceptable use policies, creating risk classifications for AI tools, or setting review thresholds for automated decisions.

We know by now that AI systems can inject bias into a decision-making process, just like humans can. In fact, studies have revealed that bias within AI often amplifies our own bias.

If those systems impact hiring, pricing, or customer interactions, the legal risks are very real, meaning in-house teams should proactively help ensure that fairness is baked into implementation.

That includes reviewing training data, questioning outputs, and making sure AI isn’t reinforcing inequalities, even unintentionally.

Our wonderful panel recently explored the practical implications of implementing responsible AI policies. Kosta Starostin, VP Legal at Cohere, Annick O'Brien, GC at Cybsafe and Michael Haynes, GC at Juro all shared their insights, which you can rewatch here.

Successful adoption isn’t just about picking a vendor or switching on a feature. It requires thoughtful planning, stakeholder buy-in, and iterative learning.

We speak to lawyers daily, so we have heard firsthand that a lot of legal tech vendors are still failing their customers when it comes to adoption.

For vendors that don’t have the right foundations in place, the introduction of AI can jeopardize adoption further by adding complexity instead of clarity.

Here’s how experts in forward-thinking legal teams are making AI stick — and how you can too:

AI isn’t a magic wand and it shouldn’t be adopted for its own sake. We know that contradicts what most organizations are hearing today, but the most effective implementations begin with a focused use case:

Like any project, begin by defining a pain point with measurable outcomes in mind, then explore how AI can address it. This is crucial because it enables you to identify which solutions actually solve your problems best, rather than which ones have the most hype.

In our recent podcast episode, Lucy Bassli explained this brilliantly:

You don’t need to spend six months building clunky workflows in a legacy CLM to succeed with legal AI. But you do need to invest time and attention into the right things:

After all, your output will only be as strong as your input, regardless of which tool you use. There’s little use investing in an expensive AI redlining solution only for it to mark your contracts up against guardrails that don’t match your own. Take the time to customize your workflows. You’ll thank yourself later.

Without metrics, momentum fizzles out. We strongly recommend determining what success with your legal AI platform looks like early, and reporting on it consistently.

Key adoption and impact metrics might include:

Whichever datapoints you proceed with, make sure you use these results to tell a compelling story internally — one that secures future or continued investment in legal AI tools that you know work.

We recently heard from the brilliant Steph Corey, Henry Warner, and Richard Mabey on all things legal department metrics in the age of AI. And Steph made a fantastic point about walking before you can run when it comes to measuring AI efficacy:

AI can speed up reviews, apply rules consistently, and enable self-serve on routine contracts — but it’s not a substitute for human judgment.

It won’t grasp your business context, negotiate delicate terms, or fix messy inputs without guidance. In fact, if you take one thing away from this article, it’s that the value you get out of legal AI is contingent on the value you put in.

Treat it as a fast, consistent teammate that still needs clear instructions, good data, and human oversight to deliver real value. Also, test it to understand the boundaries, and when it’s wise to cross them.

Your playbook is the rulebook that guides how AI works with your contracts. Without it, AI will redline against vague defaults rather than your business’s true risk profile.

The best playbooks are written in a way both humans and AI can understand. That means clear guidance, structured fallback clauses, and defined escalation points — not buried in a PDF no one reads. Think of them as machine-readable guardrails that set the boundaries for what “good” looks like.

When playbooks are designed this way, AI can apply them consistently across thousands of contracts, ensuring your output mirrors how your lawyers would actually negotiate.
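To make "machine-readable guardrails" a little more concrete, here is a minimal sketch of how one playbook entry could be expressed as structured data. It is an illustration only, not how Juro or any other vendor stores playbooks: the `PlaybookRule` class, its field names, the `assess` function, and the payment-terms figures are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class PlaybookRule:
    """One hypothetical playbook entry for a single clause type."""
    clause: str                                          # e.g. "payment_terms_days"
    preferred: int                                       # the position you open with
    fallbacks: List[int] = field(default_factory=list)   # pre-approved alternatives
    escalate_to: str = "legal"                           # who reviews anything else


def assess(rule: PlaybookRule, proposed: int) -> str:
    """Classify a counterparty's proposed value against the rule."""
    if proposed == rule.preferred:
        return "accept"
    if proposed in rule.fallbacks:
        return "fallback"
    # Anything outside the defined positions goes to a human.
    return f"escalate:{rule.escalate_to}"


# Example rule: open at 30 days, accept 45 or 60, escalate everything else.
payment_terms = PlaybookRule(
    clause="payment_terms_days", preferred=30, fallbacks=[45, 60]
)

print(assess(payment_terms, 30))
print(assess(payment_terms, 45))
print(assess(payment_terms, 90))
```

The point of the structure is that the preferred position, the fallbacks, and the escalation point are each explicit, so a person and a tool read the same rule the same way.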

We explored what priming your contract playbook for AI really looks like in our recent webinar, which you can catch up on here. Alternatively, you can start by using this free resource Juro’s legal engineers curated for teams like yours.

In-house lawyers don’t just need another point solution, or another tool in their fragmented tech stack. They need a platform that integrates seamlessly into the way their business already works — from Slack to Salesforce to Word — and delivers value from day one.

That’s why in-house legal teams at businesses like Trustpilot, Pfizer, Deliveroo, Carlsberg, AJ Bell, and more choose Juro.

Juro brings together contract drafting, review, negotiation, approval, and reporting in a single AI-native workspace.

Unlike legacy CLMs that struggle with adoption, or point solutions that only solve one part of the workflow, Juro combines the best of both worlds: the depth of legal AI with the ease-of-use that ensures your business teams actually self-serve.

Our agentic AI doesn’t just suggest changes; it takes action — redlining contracts against your playbook, surfacing risks, and escalating only when needed. That means your team can spend less time buried in boilerplate and more time shaping business strategy.

And we’re just getting started. With explainable AI, the option to talk directly to your Juro contracts via ChatGPT, and integrations that meet your teams where they already work, Juro is building the future of legal AI: one where legal isn’t a bottleneck, but a driver of growth.

More importantly, Juro is a partner, not just a vendor. We’re top-rated for our exceptional customer support and seamless adoption, so you can trust us to help you on your journey to realizing the full potential and value of legal AI.

The application of artificial intelligence tools to automatically scan, interpret, and flag clauses within a contract that may require attention. These tools can highlight non-standard language, identify missing provisions, and assess compliance against pre-set guidelines. In legal teams, this speeds up review cycles and reduces the risk of human oversight.

A structured framework of policies, controls, and oversight mechanisms for managing how AI systems are deployed and used within legal processes. This includes ethical guidelines, regulatory compliance, and internal auditing to ensure AI outputs are fair and explainable. Strong governance helps mitigate risks of bias and misuse.

The principles and best practices ensuring that AI is applied in legal contexts responsibly, with a focus on fairness, transparency, and accountability. In practice, this can mean building safeguards against bias in predictive models or ensuring that AI-generated advice is reviewed by a qualified lawyer.

A searchable repository of contract clauses enhanced by AI, allowing users to find, compare, and insert provisions quickly. These libraries can automatically suggest clauses based on context, jurisdiction, or risk profile. Legal teams use them to maintain consistency across contracts.

Adding explanatory notes, tags, or labels to legal text to help AI understand and learn from it. Annotations are often used when training models for clause recognition or classification. This process improves the AI’s accuracy in future reviews.

Systematic distortion in AI outputs caused by imbalanced or flawed training data, leading to unfair or inaccurate predictions. In a legal setting, bias might mean an AI system disproportionately flags certain contract types as risky due to historical data skew. Addressing bias is essential to maintain trust and compliance.

The use of decentralised ledger technology to securely store, timestamp, and verify legal documents and transactions. Smart contracts, built on blockchain, execute automatically when predefined conditions are met. This reduces fraud risk and creates an immutable audit trail.

A software component that applies predefined legal or operational rules to data, enabling automated decision-making. For example, it might automatically approve low-value NDAs but flag higher-value agreements for review. When paired with AI, it adapts to evolving compliance requirements.
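As a rough illustration of the NDA example above, a rule of this kind can be only a few lines long. This is a sketch under stated assumptions: the `Contract` class, the `route` function, and the `AUTO_APPROVE_LIMIT` threshold are hypothetical, and a real engine would hold many such rules in configuration rather than in code.

```python
from dataclasses import dataclass


@dataclass
class Contract:
    contract_type: str   # e.g. "NDA", "MSA"
    value: float         # contract value in your reporting currency


# Hypothetical threshold; set this according to your own risk appetite.
AUTO_APPROVE_LIMIT = 10_000


def route(contract: Contract) -> str:
    """Approve low-value NDAs automatically; send everything else to review."""
    if contract.contract_type == "NDA" and contract.value <= AUTO_APPROVE_LIMIT:
        return "auto-approve"
    return "legal-review"


print(route(Contract("NDA", 0)))
print(route(Contract("NDA", 250_000)))
print(route(Contract("MSA", 5_000)))
```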

The AI-driven process of locating and isolating specific clauses from legal documents. This allows lawyers to quickly gather all relevant terms, such as “termination” or “confidentiality,” across a portfolio. It’s a core capability in contract analytics and due diligence.

AI recommending relevant clauses based on the content, jurisdiction, and risk profile of a contract. This helps ensure consistency and compliance across agreements. For example, when drafting a contract for a U.S. jurisdiction, the AI may suggest applicable state-law provisions.

The systematic analysis of contract data using AI to uncover patterns, trends, and risks. This might include identifying average negotiation timelines or spotting frequently disputed clauses. Legal teams use these insights to optimise contract processes.

A structured approach to managing contracts from initial drafting through negotiation, execution, and renewal or termination. AI-enhanced CLM platforms can automate approvals, track obligations, and analyze contract performance. This improves efficiency and reduces legal risk.

The principles, laws, and technologies that ensure personal and sensitive information in AI-powered legal systems is handled securely. Compliance with frameworks like GDPR or CCPA is critical when processing client data. AI tools must be designed to respect data minimization and consent requirements.

Using AI to automatically locate and mask sensitive information—like names, addresses, or account numbers—before a legal document is shared externally. This reduces the risk of data breaches and ensures compliance with privacy regulations.
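The simplest form of this can be sketched with pattern matching. The example below is a deliberately naive illustration, not an AI-based approach: it masks only email addresses and 8 to 12 digit account numbers using regular expressions, and the patterns and placeholder labels are assumptions. Names and addresses generally need a trained entity-recognition model, which is not shown here.

```python
import re

# Illustrative patterns only; real documents need broader coverage.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
ACCOUNT_NUMBER = re.compile(r"\b\d{8,12}\b")


def redact(text: str) -> str:
    """Mask email addresses and account-number-like digit runs."""
    text = EMAIL.sub("[REDACTED EMAIL]", text)
    return ACCOUNT_NUMBER.sub("[REDACTED ACCOUNT]", text)


print(redact("Contact jane.doe@example.com, account 12345678."))
```

Whatever method is used, a human check before external sharing remains sensible, since a missed match is a disclosure.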

AI-powered tools that assist lawyers in making informed strategic decisions by presenting relevant legal data, case law, and predictive analysis. For example, estimating the probability of a successful litigation outcome based on precedent.

The generation of legal documents from templates based on structured inputs, often enhanced with AI for clause suggestions. It reduces drafting time and minimises errors by pulling in correct and compliant terms.

AI sorting and categorising legal documents by type, such as NDAs, supplier contracts, or employment agreements. This makes retrieval faster and supports large-scale contract audits.

The use of AI to detect and categorise specific terms like people’s names, dates, amounts, and organizations within legal text. This speeds up contract review by automatically extracting key details.

Connecting recognised entities in text to structured databases or authoritative definitions, ensuring clarity and accuracy. For example, linking a company name in a contract to its registered corporate entity in a database.

AI designed to provide clear reasoning for its outputs, showing the steps or evidence that led to a conclusion. In legal work, this transparency helps build trust and supports compliance audits.

AI identifying and pulling out key factual information from legal documents, such as dates, parties, and obligations. This is particularly useful in case preparation and due diligence.

Specialising a pre-trained AI model using firm-specific or industry-specific legal data to improve accuracy. Fine-tuned models might perform better in niche tasks like healthcare contracts or tech licensing, for example.

AI applications that predict timelines, costs, or likely outcomes of legal matters. In contracts, they might estimate how long negotiations will take based on historical data.

AI that can produce original legal content, such as drafting clauses, summaries, or even entire contracts based on prompts. This can speed up initial drafting while allowing lawyers to focus on refinement.

A unified framework for managing corporate governance, risk controls, and compliance requirements. AI tools can automate compliance monitoring and generate real-time risk reports.

Providing AI with reliable, authoritative data sources to reference when generating outputs, reducing the risk of errors or hallucinations. For example, supplying it with the correct legislative database before answering a legal query.

AI directing human reviewers to specific sections of a contract that are likely to require attention, improving efficiency. This ensures high-risk clauses are addressed early in the review process.

When AI generates convincing but false or unverified information. In law, this could mean citing a non-existent case, so human verification remains essential.

AI workflows where a human reviews and approves the AI’s output before it is finalised. This ensures both accuracy and accountability.

Combining machine learning with rule-based systems to achieve more reliable and precise legal outcomes. For example, applying hard-coded compliance rules alongside predictive analytics.

AI systems that can classify, extract, and validate information from large sets of legal documents. IDP helps automate due diligence and compliance reviews.

Search tools that understand the context and meaning behind legal queries, not just keywords. This improves accuracy when locating relevant case law or contract clauses.

A structured database that maps legal concepts, clauses, and entities along with their relationships. AI uses these connections to understand context and draw inferences, such as linking “force majeure” clauses to relevant case law. This improves search accuracy and contract analysis.

A central platform for storing, indexing, and retrieving legal documents, guidance notes, and precedent banks. When enhanced with AI, a KMS can surface the most relevant documents or clauses for a given legal task.

AI systems that rely on predefined, human-curated legal knowledge rather than purely learning from data. They’re particularly useful in areas where laws are explicit and change infrequently.

An AI model trained on massive datasets of text to understand and generate natural language. In law, LLMs can draft contract clauses, summarize contracts, or answer legal questions in plain language. Their outputs improve when fine-tuned with legal-specific data.

AI condensing lengthy contracts or case files into concise overviews while preserving key information. This helps lawyers and clients grasp essential terms without reading the entire document.

Crafting targeted prompts or instructions to guide AI toward producing accurate and relevant legal outputs. Effective prompts can drastically improve the quality of generated clauses or summaries. We have a whole guide on this here.

Using AI to analyse law firm invoices, matter budgets, and other cost data to identify savings opportunities. This helps in-house teams manage budgets and improve forecasting.

An organised classification of legal concepts, terms, and clauses. AI uses taxonomies to improve clause extraction, contract organisation, and search.

A branch of AI where algorithms learn patterns from data and improve over time without explicit programming. In legal contexts, ML is used for contract review, risk scoring, and case outcome prediction.

A decline in AI accuracy as the data a model encounters diverges from the data it was trained on. For legal AI, drift can occur when new regulations or legal language trends emerge.

AI that processes and analyses multiple data types—such as text, images, and audio—together. For example, reviewing both written evidence and voice recordings in a dispute.

AI technology that enables machines to read, understand, and generate human language. It powers many legal AI applications like data extraction, document search, and summarisation.

The process of standardising entity names (e.g., “IBM Corp.” vs. “International Business Machines Corporation”) to ensure consistency in legal datasets.
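A minimal sketch of how this standardisation might work, assuming a small hand-maintained alias table. The table contents, the suffix list, and the function name are illustrative only; real systems typically use much larger reference data.

```python
import re

# Hypothetical alias table mapping cleaned-up names to one canonical form.
CANONICAL_NAMES = {
    "ibm": "International Business Machines Corporation",
    "international business machines": "International Business Machines Corporation",
}

# Common company suffixes to ignore when matching (illustrative list).
_SUFFIXES = re.compile(r"\b(corp|corporation|inc|ltd|llc|plc)\b\.?", re.IGNORECASE)


def normalise_entity(name: str) -> str:
    """Return the canonical name if known, otherwise the trimmed input."""
    key = _SUFFIXES.sub("", name)                    # drop "Corp.", "Ltd", etc.
    key = re.sub(r"[^a-z0-9 ]", " ", key.lower())    # drop punctuation
    key = " ".join(key.split())                      # collapse whitespace
    return CANONICAL_NAMES.get(key, name.strip())
```

With this table, both "IBM Corp." and "International Business Machines Corporation" resolve to the same canonical name, while an unknown name is returned unchanged.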

A security principle ensuring that a party cannot deny the authenticity of their signature or agreement to a contract. AI supports this by integrating with secure eSignature technologies.

A formal mapping of legal concepts, relationships, and categories. Ontologies help AI systems understand how different legal terms connect, which is key for accurate legal reasoning.

AI technology that converts scanned or photographed text into editable, searchable content. It’s essential for digitising historical legal documents. Read about this in the context of contracting here.

AI algorithms that estimate the likelihood of certain legal results, such as winning a case or securing a settlement. These help in strategic decision-making.

AI detecting repeated structures or clauses across contracts. This can reveal standard market terms or highlight deviations that may pose risks.

An AI model initially trained on a broad dataset, which can then be fine-tuned for specific legal tasks. This approach saves time and resources compared to training from scratch.

The process of tracking changes in a contract, traditionally done manually but now often automated with AI. This allows negotiators to see every alteration and its impact on the agreement. To find out more, explore our guides about contract redlining and contract redlining software.

AI tracking changes to laws and regulations across multiple jurisdictions. Legal teams use these tools to proactively update affected contracts.

AI simulating potential legal risks based on historical data, industry standards, and contract terms. This helps prioritise issues in due diligence.

Assigning numerical or categorical risk levels to contracts or clauses, enabling triage in large-scale reviews.
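To make this concrete, here is a simplified sketch that turns clause flags into a numerical score and then a categorical level. The flag names, weights, and cut-offs are hypothetical and would need to reflect your own risk appetite.

```python
# Hypothetical weights for flags raised during review.
RISK_WEIGHTS = {
    "uncapped_indemnity": 5,
    "non_standard_governing_law": 3,
    "auto_renewal": 2,
}


def score_contract(flags: list[str]) -> int:
    """Sum the weights of the distinct flags found in a contract."""
    return sum(RISK_WEIGHTS.get(flag, 0) for flag in set(flags))


def risk_level(score: int) -> str:
    """Map a numerical score to a category (illustrative cut-offs)."""
    if score >= 7:
        return "high"
    if score >= 3:
        return "medium"
    return "low"
```

In a large-scale review, contracts scored "high" could be routed to a lawyer first, with "low" contracts sampled or approved in bulk.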

AI-powered search that retrieves results based on meaning and context, not just exact keywords. It’s especially valuable for legal research where synonyms and complex terms are common.

A self-executing agreement with terms written directly into code on a blockchain. It automatically enforces contract obligations when conditions are met.

An AI training method using labelled examples, such as contracts tagged by clause type, to teach the model how to classify new data.

AI filling in standard legal templates with the correct data fields, reducing drafting time. Often achieved using automated contract templates.
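The field-filling step on its own can be sketched with Python's standard `string.Template`. The template wording and field names below are made up for illustration, and this shows only the substitution, not how an AI tool would extract the values.

```python
from string import Template

# Hypothetical one-line template with three data fields.
NDA_TEMPLATE = Template(
    "This Agreement is made on $effective_date between "
    "$disclosing_party and $receiving_party."
)


def fill_template(fields: dict[str, str]) -> str:
    """Fill the template; raises KeyError if a required field is missing."""
    return NDA_TEMPLATE.substitute(fields)
```

Using `substitute` rather than `safe_substitute` is a deliberate choice here: a missing field stops the draft instead of leaving a blank placeholder in the contract.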

Analysing large volumes of legal text to identify patterns, trends, or compliance risks.

AI automatically grouping documents by subject matter, useful in e-discovery and research.

The dataset used to teach an AI model to perform specific legal tasks. Quality training data is critical for accuracy.

Applying knowledge from one AI model to a related task, such as adapting a general LLM for employment contract review.

Information that doesn’t follow a fixed format, such as free-form legal text, images, or audio. AI is used to interpret and structure it for analysis.

Mapping out specific ways AI can be applied in legal processes, helping teams prioritise high-value opportunities.

Testing an AI model’s accuracy and reliability before deployment. In legal AI, validation ensures models meet compliance and quality standards.

Retrieving information based on semantic meaning by comparing mathematical representations of text. Ideal for finding similar clauses across large contract sets.
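A toy sketch of the comparison step, assuming each clause has already been converted to a vector. The three-number vectors below are invented for illustration; real embeddings come from a model and have hundreds of dimensions.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Similarity of two vectors by angle; 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


# Hypothetical clause vectors (made-up numbers).
CLAUSE_VECTORS = {
    "limitation of liability": [0.9, 0.1, 0.0],
    "indemnification": [0.8, 0.3, 0.1],
    "governing law": [0.0, 0.2, 0.9],
}


def most_similar(query_vec: list[float], k: int = 2) -> list[str]:
    """Return the k clause names whose vectors are closest to the query."""
    ranked = sorted(
        CLAUSE_VECTORS,
        key=lambda name: cosine_similarity(query_vec, CLAUSE_VECTORS[name]),
        reverse=True,
    )
    return ranked[:k]
```

With these toy vectors, a query of `[1.0, 0.0, 0.0]` ranks "limitation of liability" first and "indemnification" second, even though no keywords are compared.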

Tracking changes to legal documents over time to ensure an accurate record of all revisions. AI can automate this tracking and highlight differences. Find out more in this guide to contract versioning.

An AI-powered tool that handles routine legal queries, scheduling, and document retrieval, freeing lawyers for higher-value tasks. Also known as legal AI chatbots.

Using AI to streamline and automate repetitive legal processes, from contract approvals to compliance checks.

AI converting words into numerical representations that capture their meaning and relationships. This underpins many NLP applications in legal AI.

Legal AI tools like Juro’s can draft entire contracts in seconds, drawing from automated contract templates, playbooks, and prior agreements to ensure alignment with company standards. But it doesn’t stop at drafting.

Juro’s agentic AI also reviews contracts for deviations from pre-approved positions, suggests redlines, and can even explain the rationale behind its edits — saving lawyers hours per document.

These capabilities are especially valuable in high-volume areas like NDAs, MSAs, or SLAs, where speed matters but risk can’t be compromised. Instead of manually combing through each clause, lawyers can trust AI to handle the heavy lifting, surfacing only the outliers that truly need attention.

Juro’s AI-powered contract review agent now allows third-party contracts to be reviewed and redlined against your contract playbooks.

This is available in Juro, Slack, Microsoft Teams, and Microsoft Word, making this fully automated contract review functionality accessible where your teams already work.

AI tools can monitor whether incoming contracts conform to your standard contract playbook — and flag deviations in real time.

For example, if a supplier sends over terms with an unusually aggressive indemnity clause, the AI can detect it instantly, suggest fallback language, and escalate to legal if thresholds are exceeded.

This kind of automated consistency reduces the likelihood of risk slipping through unnoticed, and ensures contracts are aligned with evolving company policies.
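The accept, fallback, and escalate logic described above could look something like the sketch below. It is not how Juro or any specific tool implements playbook checks; the `PlaybookPosition` type, its fields, and the idea of expressing an indemnity cap as a multiple of annual fees are assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class PlaybookPosition:
    preferred_cap: float         # hypothetical: cap as a multiple of annual fees
    escalation_threshold: float  # beyond this, legal must be involved


def check_indemnity_cap(proposed_cap: float, position: PlaybookPosition) -> str:
    """Compare a counterparty's proposed cap with the playbook position."""
    if proposed_cap <= position.preferred_cap:
        return "accept"
    if proposed_cap <= position.escalation_threshold:
        return "suggest_fallback"
    return "escalate_to_legal"
```

For a playbook with a preferred cap of 1x fees and an escalation threshold of 2x, a proposed 1.5x cap would prompt fallback language, while 5x would go straight to legal.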

Many in-house teams are also using AI to streamline legal intake. Instead of managing ad-hoc emails or Slack messages, AI tools can categorise, route, and even respond to incoming contract requests.

For example, if someone asks, “Can I use this contractor agreement for a freelancer in Germany?”, an AI tool might pull up the relevant template, check jurisdiction-specific requirements, and respond with a recommended next step, or escalate to legal for input if needed. This offers a fast and effective way to automate the legal front door.

In more advanced use cases, legal AI is also assisting with litigation preparation or M&A diligence. By reviewing large volumes of contracts and surfacing relevant clauses, obligations, and risks, AI dramatically reduces the time needed to prepare disclosures or identify red flags.

Some teams use AI to flag renewal terms, change-of-control clauses, or exclusivity provisions — insights that would previously take days to compile manually.

AI tools are now able to analyse regulations and map them to internal policies — ensuring compliance and flagging gaps.

From GDPR audits to identifying where new employment laws affect existing templates, AI can highlight risks without a team spending weeks in spreadsheets.

In-house teams often have hundreds of legacy contracts, memos, or policies, but surfacing the relevant ones quickly can be near impossible.

With AI-powered search and summarisation, teams can find the “closest match” precedent or playbook in seconds, transforming how legal knowledge is accessed and reused.

AI enables lawyers to focus on complex strategic work — advising on risk, supporting go-to-market teams, or unblocking deals — because it handles the repetitive low-value tasks.

But more than that, AI can help simulate legal outcomes, summarise risks, and even suggest negotiation strategies based on past deals.

AI tools can extract contract metadata, identify clause trends, and benchmark across time or counterparties. That means teams can spot issues before they escalate — and make informed decisions faster.
